Chapter 1 What is Sequence Data ?

1.1 Biological sequences, a primer

To fully understand the work that was done during this thesis, as well as the choices that were made, some basic knowledge of molecular biology and genetics is needed. If you are already familiar with biological sequences, feel free to skip ahead to section 1.2.

1.1.1 What is DNA ?

DesoxyriboNucleic Acid (DNA) is one of the most important molecules there is, without it complex life as we know it is impossible. It contains all the genetic information of a given organism, that is to say all the information necessary for the organism to: 1) function as a living being and 2) make a perfect copy of itself. This is the case for the overwhelming majority of living organisms on planet earth, from elephants to potatoes, to micro-organisms like bacteria.

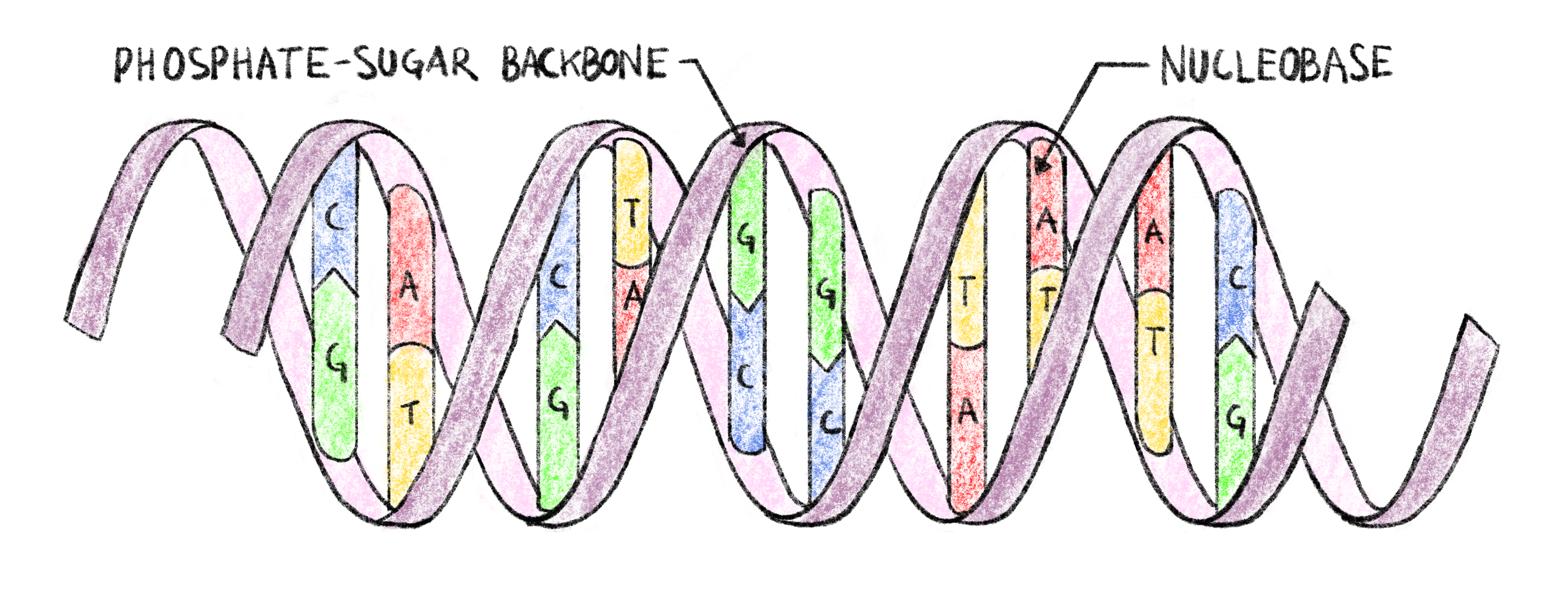

DNA is a polymer, composed of monomeric units called nucleotides. Each nucleotide is composed of ribose (a five carbon sugar) on which are attached a phosphate group as well as one of four nucleobases: Adenine (A), Cytosine (C), Guanine (G) of Thymine (T). These four types of nucleotide monomers link up with one-another, through phosphate-sugar bonds, creating a single strand of DNA. The ordered sequence of these four types of nucleotides in strand encodes all the genetic information necessary for the organism to function. Nucleotides in a strand form strong complementary bonds with nucleotides from another strand, A with T and C with G. These bonds allow two strands of DNA to form the double-helix structure of DNA1 shown in Figure 1.1. The specificity of nucleotide bonds ensure that the two strands of the double helix are complementary and that the information contained in one strand can be recovered from the other. This ensures a certain structural stability to the DNA molecule and a way to recover the important information that could be lost due to a damaged strand.

Figure 1.1: Double-helix structure of DNA.

Each strand of DNA has a phosphate-sugar backbone on which are attached nucleobases. The two strands are linked by complementary bonds between the nucleobases of different strands (A bonding with T and C bonding with G), encoding the same information of both strands.

The amount of DNA necessary to encode the information varies greatly from organism to organism: 5400 base pairs (5.4kBp) for the \(\varphi X174\) phage2, 4.9MBp for Escherichia coli3, 3.1GBp for Homo sapiens4 all the way up to almost 150GBp for Paris japonica, a Japanese mountain flowering plant5. While very small genome size tend to occur in smaller, simpler organisms, genome size does not correlate with organism complexity6.

1.1.2 From Information to action

1.1.2.1 Proteins, their structure and their role

The double stranded DNA molecules present in the cells of a living organism contain information only; in order for the organism to live, this information must be read and translated into actions. Most of the actions necessary for “life” are taken by large molecules called proteins, they have a very wide range of functions from catalyzing reactions in the cell to giving it structure7.

Proteins are macromolecules that are made up of one or several chains of amino acids. These chains then link together and fold up in a specific three dimensional structure, giving the protein the shape it needs to fulfill its goal. This structure is determined by the sequence of amino acids, and a given protein can be identified by this amino acid sequence7.

This sequence is directly dependent on the information contained in the DNA. First the DNA is transcribed in a similar, but single stranded, molecule called RNA (Ribonucleic Acid) which encodes the same sequence. This RNA molecule is then translated into a protein by the following process8:

- Nucleotides in the RNA sequence are read in groups of three called codons.

- These codons are read sequentially along the RNA molecule.

- Each codon corresponds to an amino acid, according to the genetic code.

- The sequence of codons in RNA (and by extension DNA) determines the sequence of amino acids.

- The translation process is stopped when a specific type of codon (a “Stop” codon) is read.

With four types of nucleotides and codons grouping three nucleotides there are \(4^3=64\) possible codons. However, as stated above, proteins are only made up of 20 different amino acids, meaning that several different codons correspond to the same amino acid. This gives the translation process a certain robustness to errors that can occur when the DNA is copied to create a new cell, or when it is transformed into RNA prior to protein translation.

The portion of DNA that is read to create the protein is said to be “coding”, and is called a gene. There are several thousands of genes in the human genome9 resulting in proteins executing thousands of different functions in a cell. In human beings, coding DNA represents only 1% to 2% of the total genome10,11. The large majority of the DNA in a human being is not translated into proteins, a portion of it has a regulatory role, controlling transcription and translation, but the role remains unknown for the rest of the human genome12,13.

1.1.2.2 Making mistakes

Going from DNA sequence to protein is quite a complicated process involving several steps, it is therefore possible for a mistake to happen. There are several mechanisms to avoid mistakes and alteration of the genetic information: the complementary nature of the two strands of DNA, the redundant nature of the genetic code as well as error correction mechanisms in the molecules (called “polymerases”) that read and write DNA and RNA being some of them. Despite all that, some errors in the nucleic acid (DNA and RNA) or protein sequences still make it through, these are called mutations.

1.1.2.2.1 Where can mistakes happen ?

There are several sources of error that can alter genetic information14:

DNA replication: When a cell divides, or when an organism reproduces, the DNA molecule must be copied in order to preserve and transmit genetic information. This process has a very low rate of errors, with as low as one error for every billion to every hundred billion of replicated base pairs15. This is due to the fact that the DNA polymerase (the protein responsible for copying DNA molecules), has a relatively low error rate to start with, but mostly to the error correcting mechanisms that are present in certain cells and bacteria16.

RNA transcription: Since errors in RNA transcripts are less important than in replicated DNA, RNA polymerases have a much higher error rate than their DNA counterparts. This error rate has been estimated to be between four errors for each million17 to two errors for each hundred thousand18 transcribed bases.

Protein translation: The process of translating RNA to a protein is done by proteins called ribosomes. This is a very error prone process with a mistranslation rate estimated to be of the order of one error for every 10,000 codons translated19

Other mutagenic events: Many external events and factors have been shown to provoke mutations in exposed DNA such as Ionizing radiation20, UV rays21, toxins22, heat stress23, cold stress24 or oxidative stress25.

While RNA transcription and protein translation are much more error prone processes than DNA replication, the errors induced only alter the expression of the genetic information. The effects of these errors are localized to the cells where they happen and are not transmitted to offspring. However these transcription errors are not unimportant and increased transcription error rates have been hypothesized to cause severe neurological symptoms in pediatric cohorts26.

1.1.2.2.2 What kind of errors are possible?

In biological sequences (nucleic acids and proteins), mutations can result from one of three error modes:

- Substitutions, where the original base unit (nucleotide or amino acid) is mistakenly replaced by another one, for instance inserting an A instead of a G during RNA transcription.

- Insertions,where a new base unit not present in the original sequence is added to the newly synthesized biological sequence.

- deletions, where a base unit from the original sequence is skipped and not taken into account when synthesizing the new sequence.

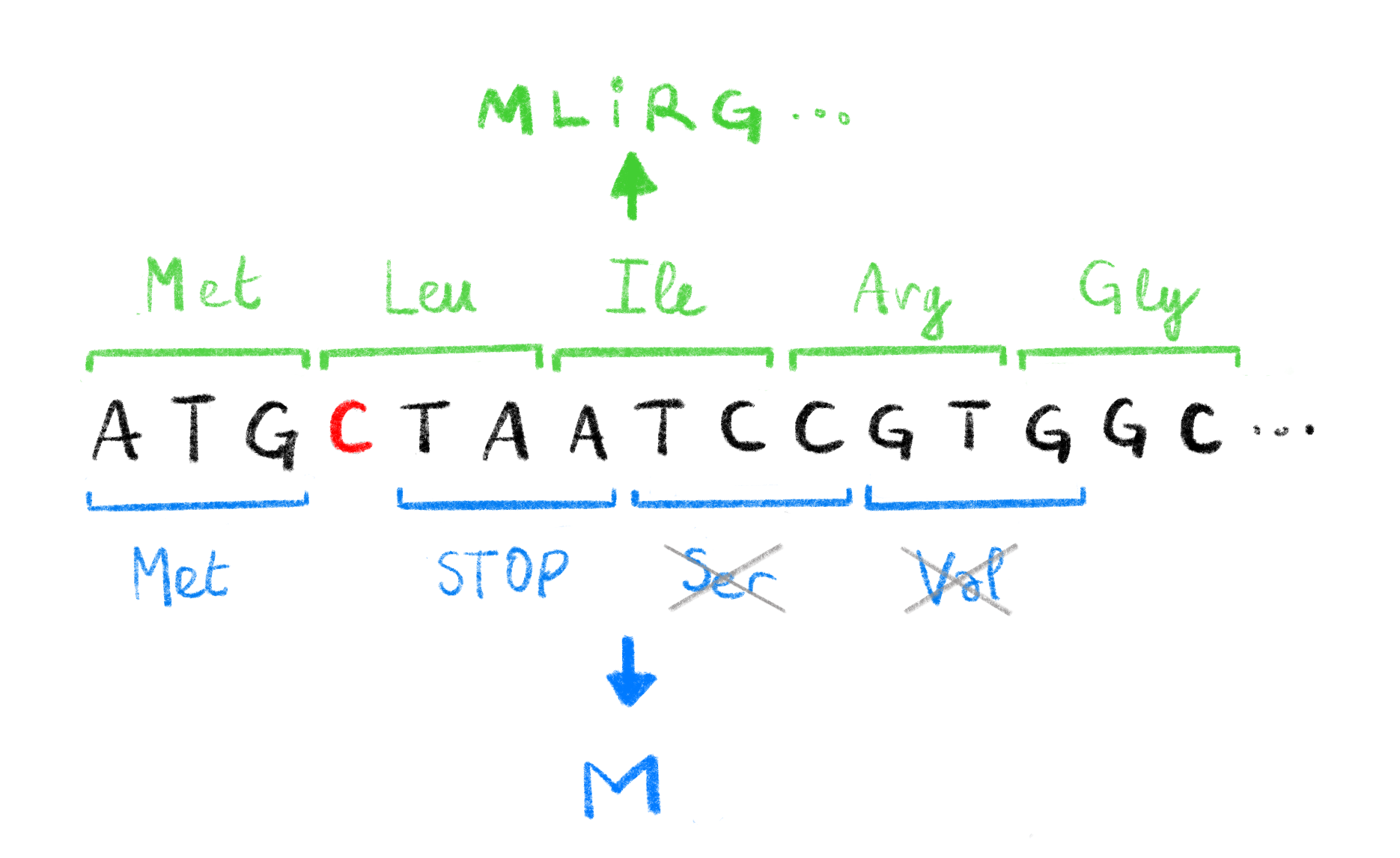

While these three types of errors occur both in nucleic acids and proteins there are some things to consider about the consequences of nucleic acid mutations on protein synthesis. Due to the redundant nature of the genetic code mentioned in Section 1.1.2.1, some substitutions in the nucleic acid sequence will result in the same protein sequence and therefore not have altered protein activity. Some mutations however will result in a substitution at the amino acid level which could potentially lead to a physicochemically altered or even non-functional protein. Finally, insertion and deletion errors (collectively called indels) can have big consequences on resulting proteins. Inserting or deleting nucleotides in multiples of three will result in the insertion/deletion of amino acids in the protein, any other length of indel will result in what is called a frameshift mutation27. These mutations causes changes in all the codons following the mutation, potentially resulting in a completely different amino-acid sequence, including premature stop codon apparition as shown in Figure 1.2.

Figure 1.2: Effect of frameshift mutations.

The deletion of a single C (highlighted in red) in the original DNA sequence leads to a change in the codons read during translation. The original codons (shown in green, with corresponding amino acids, above the sequence) translate to a functional protein MLIRG.... The new codons caused by the deletion (shown in blue, with corresponding amino acids, below the sequence), induce a premature STOP codon leading to a non-functional protein M. The Serine and Valine codons are not translated due to the STOP codon.

1.1.2.2.3 What effect can mutations have ?

As we stated above, some mutations in DNA may have no repercussions, some others can lead to non-functional proteins. In some cases mutations can be associated with a trait in the mutated individual. For example a single mutation in a gene linked with coagulation can lead to pathological Leiden thrombophilia28, a single amino acid deletion in the CFTR protein leads to (the very deadly) cystic fibrosis29, and many mutations have been linked to complex diseases like type 2 diabetes30,31. All mutational effects are not necessarily bad for the organism though, and mutations are essential for bacteria32 or viruses like HIV33 to develop resistance to treatment (more on that in Chapters 5 and 6).

While some mutations, have had their mechanisms and consequences thoroughly studied, in many cases mutations are simply linked to a trait. Since it is easier to show correlation than causation, and that the former does not necessarily imply the latter, it is important to further study mutations of notice to understand their potential consequences.

1.2 Obtaining sequence data

In many fields, especially in computational biology, we need to know what genetic information the studied organism has. That is to say: what is the exact sequence of nucleotides that make up its DNA? The process of figuring out this sequence is, perhaps unsurprisingly, called sequencing. A sequence that is produced by this process is called a sequencing read or, more commonly, just a read.

1.2.1 Sanger sequencing, a breakthrough

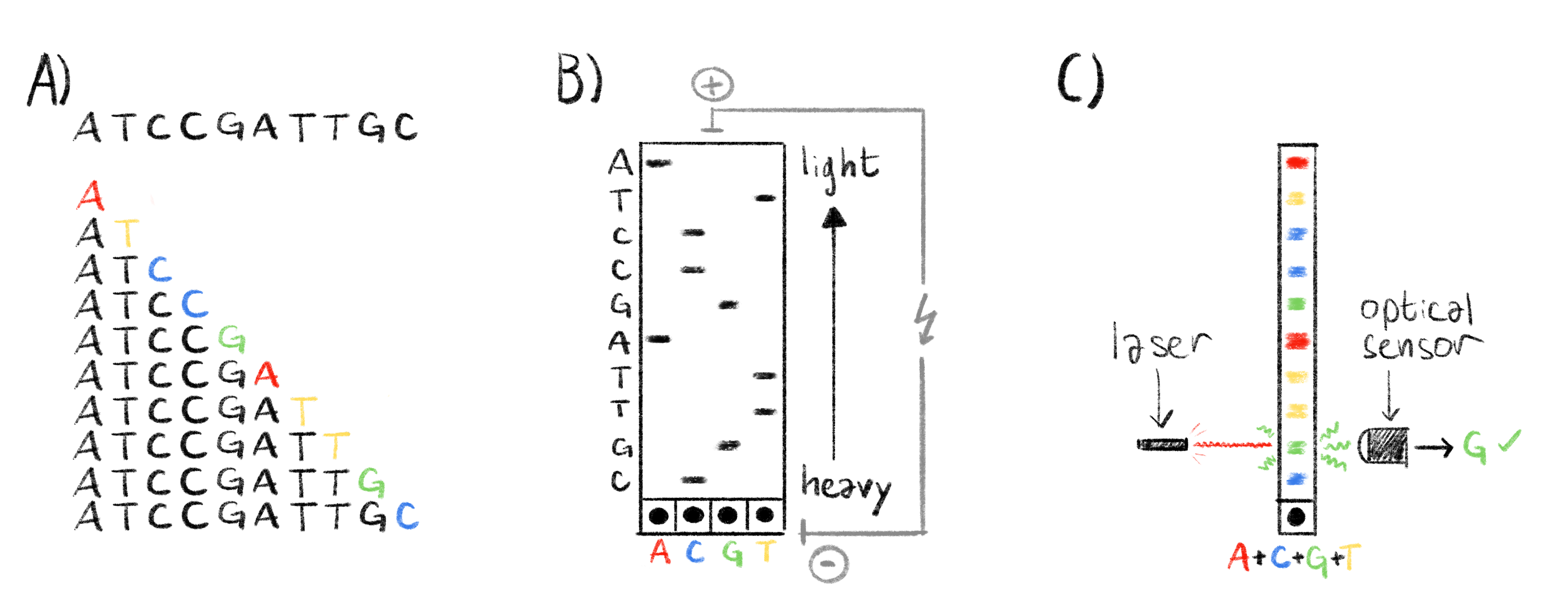

The first widely used sequencing method was developed in 197734. Sanger et al. devised a simple method to read the sequence of nucleotides that make up a DNA sequence known as chain termination sequencing or simply Sanger sequencing (represented in Figure 1.3). Although this method is now mostly obsolete, it established some key concepts in sequencing, some of which are in action in the most modern sequencers.

To understand Sanger sequencing, one must first understand how to synthesize DNA. As we stated in Section 1.1.1, DNA is built up from building blocks that we called nucleotides, more specifically deoxynucleotide triphosphates or dNTPs. These dNTPs are made up of a sugar (deoxyribose), a nucleobase (A, T, G or C) and 3 phosphate groups. By successively adding these dNTPs at the end of an existing DNA molecule, we extend it, linking one of the phospates of the dNTP to an oxygen atom on the last nucleotide of the DNA molecule. Let us now consider a dideoxynucleotide triphosphate (ddNTP), which is identical to a dNTP except we remove a specific oxygen atom. This ddNTP can be added to the growing molecule of DNA like regular dNTPs, but since it is missing that one oxygen atom no more dNTPs or ddNTPs can be added to the DNA molecule after this one. The elongation is terminated and we call these ddNTPs chain-terminators. This combination of DNA synthesis followed by termination are at the heart of Sanger sequencing.

It is important to note that dNTPs and ddNTPs refer to nucleotides with any nucleobase. We can refer to specific dNTPs by replacing the “N” with the base of choice. For example, dATP refers to the dNTP that has adenine as a base. Similarly we have dCTP, dGTP and dTTP (as well as ddATP, ddCTP, ddGTP and ddTTP).

- The first step of Sanger sequencing (and most sequencing methods) is to amplify the DNA molecule we wish to sequence, i.e. make many copies of it (usually through a process called PCR). These clones of the sequence are then separated into their two complementary strands one of which will be used as a template for the sequencing steps.

- The second step is to prepare 4 different sequencing environments (think of it as 4 test tubes). In each environment we introduce an equal mix of the 4 dNTPs, that will be used to elongate new DNA molecules from the amplified templates, and a single type of ddNTP. So in the first test tube we will have only ddATP, ddCTP in the second, et cetera. In addition, these ddNTP are marked, at first with radioactive isotopes, and later on, as the technology matured, with dyes. This marking means that we can observe the location of these ddNTPs later on.

- Then an equal portion of the template is introduced in each environment with DNA polymerases (that will add the nucleotides to elongate a sequence that is complementary to the template), and short specific DNA molecules called primers that are necessary for the polymerases to start synthesizing new DNA.

- During synthesis the chain is elongated with dNTPs by the polymerase and the reaction stops once a ddNTP is incorporated. At the end of this process we have plenty of fragments of DNA in each test tube, and we know that these fragments end with a specific base in a given environment. For example, in the test tube where we added ddATP, we know that all the fragments end with an A, and that we have all the possible fragments that start at the beginning of the template and end with an A. If the template is AACTA, then the fragments we would get in the ddATP test tube would be A, AA, and AACTA.

- Then, a sample from each environment is taken and deposited on a gel, each in its own lane. A process called electrophoresis is then used to separate the fragments according to their weight. By applying an electrical current to the gel, the fragments of DNA will migrate away from where they were deposited along their lane in the gel. Lighter, shorter DNA fragments will travel further than heavier ones. We then get clusters of fragments ordered by weight (and therefore by length) called bands. With the marked ddNTP we can reveal these bands in the gel.

- We know that: 1) bands are ordered by length; 2) consecutive bands correspond to the addition of a single nucleotide; 3) in a specific lane fragments corresponding to a band end with a specific base. This knowledge is enough to deduce the sequence of the template. An example gel is shown in Figure 1.3.

This process allowed Sanger et al. to sequence the first genome, of a \(\varphi X174\) bacteriophage, in 19772. Although revolutionary, this method was costly, time consuming and labor intensive. Adjustments to this method were made in order to make it faster and less expensive. An important step was to change the way ddNTPs were marked. By using fluorescent markers, each base having a distinct “color”, we can eliminate the need to have 4 different environments and lanes in the gel35,36. This also paved the way for automating sequencing, each fluorescently marked band can be excited with a laser, and the resulting specific wavelength can be recorded by optical systems and the corresponding base automatically deduced37 (Also see Figure 1.3). Other improvements were made such as using capillary electrophoresis instead of gel electrophoresis.

Figure 1.3: Overview of the sanger sequencing protocol.

A) The sequence to read and all the generated fragments, with highlighted ddNTP chain terminators, ordered by molecular weight (i.e. length). B) Classical Sanger sequencing. The fragments are separated by electrophoresis and the lighter fragments travel further from the wells at the botom of the gel. Each lane in the gel correpsonds to a specific ddNTP. The radioactivly marked ddNTPs appear as black band in the gel and we can reconstruct the sequence by reading the bands from top to bottom, the column in wich the band appears indicating which base is at each position. C) Automated Sanger sequencing. The fragments are also separated by electrophoresis, as in panel B. Chain terminators are marked with fluorescent markers. When excited by a laser, each ddNTP emits a specific wavelength. This is read by an optical sensor and the corresponding ddNTP is recorded. By exciting each band we can quickly deduce the sequence.

These gradual improvements to the Sanger sequencing protocol made it possible to sequence longer and more accurate reads, with the latest technologies resulting in reads reaching 1 ,000 base pairs with an accuracy of 99.999%38. These improvements also resulted in a lower cost for sequencing, which was greatly decreased from around $1000 per base-pair39 to only $0.5 per kilobase38. Finally these technological improvements also increased the throughput of sequencing machines from around 1 kilobase per day39 to 120 kilobases per hour40.

Despite these improvements, for ambitious endeavors such as the human genome project, sequencing was a massive undertaking: the first human genome is estimated to have cost between 500 million and 1 billion US dollars to sequence41.

1.2.2 Next-generation sequencing

Thanks to these large sequencing projects and the genomics field in general, the richness and usefulness of sequence data was made ever more apparent. This growing need of sequence data ushered in a new era of sequencing with the development of many new sequencing methods designed to have a higher throughput and a lower cost than Sanger sequencing. This second generation of sequencing technologies is often referred to as Next-Generation Sequencing (NGS) or Massively parallel sequencing. While there are different technologies, they share a few common key points42:

As with Sanger sequencing, we first need to amplify and clone the DNA template. However, since these technologies result in shorter reads than Sanger sequencing, the DNA we want to sequence must first be randomly broken up into small template fragments before being amplified.

The amplified template fragments are attached to some sort of solid support, resulting in a physical support with billions of template fragments attached to it.

As in Sanger sequencing, DNA molecules, complementary to the template fragments, are elongated. This happens for billions of fragments at the same time (hence the “massively parallel” epithet).

The addition of specific nucleotides to a chain are detected in real time, and there is no permanent chain termination. There is no need for the long step of electrophoresis. These detections are simultaneous for all the molecules being elongated at once.

The result of these steps is a very large number of short reads. With data analysis these short reads can be used to deduce longer sequences and eventually a fragmented approximation of the original whole genome sequence through a process called assembly.

The main NGS method is called “sequencing by synthesis”, developed by a company: Illumina. It is commonly referred to as Illumina sequencing. This method is based on reversible chain terminators, developed at the Institut Pasteur in the 90’s43. These are marked dNTPs that can be used to elongate DNA molecules, but that have an additional molecular group that makes them terminators by default. However this terminating group can be removed once the NTP is included in a DNA molecule allowing the elongation process to continue. These dNTPs are fluorescently marked and when excited with a laser they emit light with a distinctive color. During Illumina sequencing, these reversible chain terminators are included to millions of fragments at the same time, stopping elongation. At this point all the fragments are excited with a laser and an optical system takes a picture of the emitted colors for all the fragments at once. In this image, a pixel loosely corresponds to a sequenced fragment, and its color to the most recently added dNTP. The terminating groups are then cleaved and the process can start over by incorporating a new batch of reversible terminators. By observing the successive images we can deduce the sequence of added nucleotides for each sequenced fragment and obtain all of our reads.

Another NGS method is called pyrosequencing, commercialized by 454 Life Sciences. Contrary to Illumina sequencing, this method does not use reversible chain terminators. Instead it uses a special enzyme called luciferase that emits light as specific dNTPs are added. This process is repeated for the 4 dNTPs (similarly to Sanger sequencing) and from the light emissions we can deduce the sequence of nucleotides44.

These technologies yield reads around 150 nucleotides for Illumina and 400nt for pyrosequencing45. This is much shorter than the 1kB reads obtainable with the latest Sanger sequencing technologies. However the throughputs are much higher40: 2.5 to 12.5 Gigabases per hour for Illumina and 30 Megabases per hour for pyrosequencing. Costs are also quite low: $0.07 and $10 per Megabase for Illumina and pyrosequencing respectively. The per-base sequencing accuracies are also quite high, up to 99.9% for both Illumina46 and pyrosequencing40. A summary of the key characteristics for various sequencing technologies can be found in Table 1.1. The lower cost and higher throughput has made the Illumina sequencing technology the dominant one. The company estimates that 90% of the world’s sequencing data was generated with Illumina machines in 201547.

1.2.3 Long read sequencing

Although NGS technologies revolutionized the sequencing world, recent efforts have been made to get longer reads. These third-generation methods generate reads of tens of kilobases and are commonly called long-read sequencing method. Long reads have a host of applications48 for which short NGS reads might not be well suited: De novo assembly of large complex genomes, studying complex repetitive regions such as centromeres or telomeres or detection of structural variants. They have recently been used to assemble the first truly complete human genome, including telomeric and centromeric regions4.

The two available long read technologies are: Single Molecule Real Time sequencing (SMRT), commercialized by Pacific Biosciences (PacBio) and Nanopore sequencing, commercialized by Oxford Nanopore Technologies (ONT). While these technologies are quite different, they both result in much longer reads than even Sanger sequencing in real time, without the need for chain terminators or separate sequencing reactions, all with a high throughput and at a reasonably low cost.

SMRT sequencing was first developed in 200949, before being commercialized and furthered by PacBio. The basic principle is as follows:

- Fragment and amplify DNA to obtain a very large number of DNA templates.

- Link both strands of each DNA template together with known sequences called bell adapters. Denature the DNA to create a single stranded, circular DNA molecule.

- Primers and polymerases are attached to the circular molecule specifically on one of the bell adapters.

- Add the circular DNA template, primer, polymerases complexes to a SMRT chip. This chip is essentially a large aluminium surface with hundreds of thousands of microscopic wells called Zero-Mode Waveguides (ZMWs) only 100nm in diameter50. The polymerases are chemically bonded to the bottom of each of these ZMWs so we effectively get a single DNA template and polymerase per well.

- Fluorescently marked dNTPs are incorporated progressively in each of the wells. When a marked dNTP is incorporated in the newly synthesized DNA brand, light of a specific wavelength is emitted.

- The size of these ZMWs make the detection of the fluorescence possible with an optical system. Incorporation of dNTPs in each ZMW can be detected simultaneously in a parallel fashion and the resulting sequences deduced.

Nanopore sequencing, thought of in the eighties, further developed along the years51 and first commercialized by ONT in 201452, is completely different from all the sequencing technologies previously mentioned. Where all the other ones are based on synthesizing a complementary DNA strand and detecting specific dNTP incorporation in some way or another, there is no synthesis in nanopore sequencing. The principle relies on feeding a single strand of a DNA template through a small hole in a membrane, a nanopore, at a controlled speed. As the nucleotides go through the nanopore, an electric current is formed between both sides of the membrane. This current can be measured and is specific to the succession of 5 to 6 nucleotides inside the nanopore channel at any given time. By looking at the evolution of the electric current as the DNA strand goes through the nanopore, we can deduce the sequence of nucleotides through a process called base calling. Base calling is usually done with machine learning methods, mainly artificial neural networks53. In the flow cells used in ONT sequencers, there are hundreds of thousands of nanopores, spread out over a synthetic membrane, allowing for massively parallel sequencing as well. Theoretically, since this method is not based on synthesis, the upper limit for read length is only limited by the length of the template, and in practice ONT sequencing produces the longest reads.

Both technologies yield long reads, the median and highest read lengths being 10 kilobases and 60 kilobases respectively for PacBio sequencing54. For nanopore the median read lengths of 10 to 12 kilobases55,56 are similar to PacBio, but in it can also yield ultra-long reads of 1 up to 2.3 megabases long57–59. The length of the reads and parallel nature of these two technologies allow these sequencers to have truly massive throughputs. PacBio sequencers can sequence between 2 and 11 gigabases per hour and ONT from 12.5 gigabases per hour, up to a staggering 260 gigabases per hour using the latest ONT PromethION machines56. The cost of sequencing with these machines, while higher than for Illumina sequencers, remains reasonably affordable at $0.32 and $0.13 per megabase for PacBio and ONT respectively60. These characteristics are summarized in Table 1.1 along with other sequencing technologies.

The length, throughput and sequencing cost of both these technologies paint a pretty picture, and indeed they have proved useful in many settings, but sequencing accuracy is a problem with these technologies. The per-base sequencing accuracy has been estimated to be between 85% and 92% for PacBio sequencers and 87% to 98% for ONT machines56,61,62. This accuracy is much lower than either Sanger sequencing or Illumina reads. Characterizing, correcting and accounting for these errors is widely studied and it will be discussed in more detail in Sections 1.3 and 1.4.

technology | read length (nt) | throughput (nt/hour) | cost ($/Mb) | accuracy |

Sanger | 1000 | 120 103 | $500 | 99.999% |

Illumina | 150 | 2.5-12.5 109 | $0.07 | 99.9% |

Pyrosequencing | 400 | 30 106 | $10 | 99.9% |

PacBio SMRT | 10000 (up to 60000) | 2-11 109 | $0.32 | 85-92% |

Nanopore | 12000 (up to 2.5 106) | 12.5-260 109 | $0.13 | 87-98% |

While most of the mentioned technologies can also be adapted and used to sequence RNA instead of DNA63,64, directly sequencing proteins remains a challenge. The sequence of amino acids making up a protein is usually deduced from the codons in sequenced DNA or RNA after detection of potentially coding regions called open reading frames (ORFs). Development of methods to directly sequence protein molecules using mass spectrometry was started not very long after Sanger sequencing65 and improved66. New methods are still being developed67 but protein sequencing remains a challenge.

1.3 Sequencing errors, how to account for them ?

Sequencing technologies are not perfect. They make mistakes, as we can see from the accuracy rates reported in Section 1.2. For technologies based on nucleic acid synthesis (i.e. everything except ONT), since they use polymerases it stands to reason that the same three types of errors, described in Section 1.1.2.2, occur: substitutions, insertions and deletions. For long read technologies though, most of the errors do not come from the polymerase, but from signal processing used to deduce the sequence. Since both technologies execute single molecule sequencing, the signal to noise ratio is low68,69 making base calling more complicated.

This explains the discrepancy in error rates between short and long read sequencing technologies: the former getting as low as 10-4 or 10-5 after computational processing70 where the latter are between 10% and 15%. This high error rate long reads is bothersome and many efforts have been made to lower this error rate, computationally and technologically.

1.3.1 Error correction methods

The long read error-correction literature and toolset is rich and active71–73. There are two main ways to correct errors: 1) hybrid methods where high-accuracy short reads are used, and 2) non-hybrid methods where only the long-reads are used.

In Non-hybrid methods71,74, by finding regions that overlap fairly well between reads and taking the consensus of the overlapped regions (i.e. the majority nucleotide at each position), some errors can be eliminated. In many analyses and sequencing data processing pipelines, the first step is to break up the reads into all possible overlapping subsequences of length \(k\) called \(k\)-mers (e.g the 3-mers of ATTGC are ATT, TTG and TGC). Rare \(k\)-mers in the read dataset, i.e. \(k\)-mers that appear only a handful of times in all the reads, are likely the result of an error and filtering them out can improve analysis. One or both of these procedures are implemented in several pieces of commonly used software such as assembler like wtdbg275, and canu76 or standalone long-read correctors like daccord77. In some cases, errors are corrected not on the raw reads but after having assembled the long reads into long continuous sequences (contigs), this process is called polishing. The ntEdit polisher78 also filters out rare \(k\)-mers to correct errors. The Arrow79 and Nanopolish80 polishers correct the assembly using the raw PacBio and ONT long reads respectively, and Racon81 can use bot types of long-reads to polish assemblies.

Hybrid methods, as their name suggest, make use of short reads to correct errors in long reads. By finding similar regions between the short and long reads we can use the higher accuracy of short reads to correct the long ones. This is implemented in many pieces of software proovread82, Jabba83, PBcR84 or LoRDEC85. Short reads can also be used to polish long read assemblies with tools like Pilon86. The first complete human genome was assembled and polished using many different sequencing technologies including PacBio, ONT and Illumina technologies4.

1.3.2 More accurate sequencing methods

While a lot of effort is being put into error correction, another angle of attack to lower the error rate of long reads is to improve the sequencing technology.

In 2019, PacBio introduced HiFi reads, based on a circular consensus (CCS) technique87. During SRMT sequencing the 2 strands are linked together by bell adapters to form a circular DNA template (c.f. Section 1.2.3), the central idea of CCS is to sequence this molecule multiple times by going over the circle more than once. In the resulting long sequence the known bell adapter sequences can be removed, and a consensus sequence can be built from the multiple passes over the same DNA template. This results in long-read accuracies of 99.8% to 99.9%56,87. This works because PacBio sequencing errors are mostly randomly distributed along the sequenced template (more on that in Section 1.4.2). Therefore it is unlikely that the same error will appear in multiple passes over the same template portion.

For ONT sequencing, most improvement efforts have been focused on base-callers. These tools were originally based on Hidden Markov Models88 (HMMs), but gradually they have been shifting over to neural network based deep learning methods53,74,89,90 with faster inference times and better performance.

Similarly to PacBio HiFi reads, ONT developed 2D, and 1D2 sequencing. In 2D sequencing, both strands of the DNA molecule to sequence are linked with a hairpin adapter to form one long sequence. Each strand is sequenced once and a consensus is built from these 2 passes91. 1D2 sequencing operates in a similar fashion but without the need for a hairpin adapter92. 2D sequencing produces reads with 97% accuracy albeit much shorter than standard 1D sequencing91. Recently, Oxford Nanopore Technologies announced the release of a new technology they call duplex. Using new chemistry, a new basecaller and sequencing of both strands (similarly to 2D and 1D2) they announce raw read accuracies of 99.3%93. Pre-printed research seems to confirm these numbers with one experiment yielding duplex reads with a 99.9% accuracy94.

A technologically agnostic method using unique molecular identifiers added during the template preparation phase, and consensus sequencing has been shown, in specific contexts, to improve the accuracies of both ONT and PacBio CCS long reads to 99.59% and 99.93% respectively95.

Finally, new sequencing technologies are being developed, like built in error-correction short-read technologies yielding error-free reads of up to 200 nucleotides long96. Illumina also recently announced its own high-throughput, high-accuracy long-read sequencing technology in 202297, although details about the performance and technology are scarce.

1.4 The special case of homopolymers

Despite improvement in error correction methods and sequencing technologies, certain genetic patterns are particularly difficult to process, homopolymers are one such pattern.

1.4.1 Homopolymers and the human genome

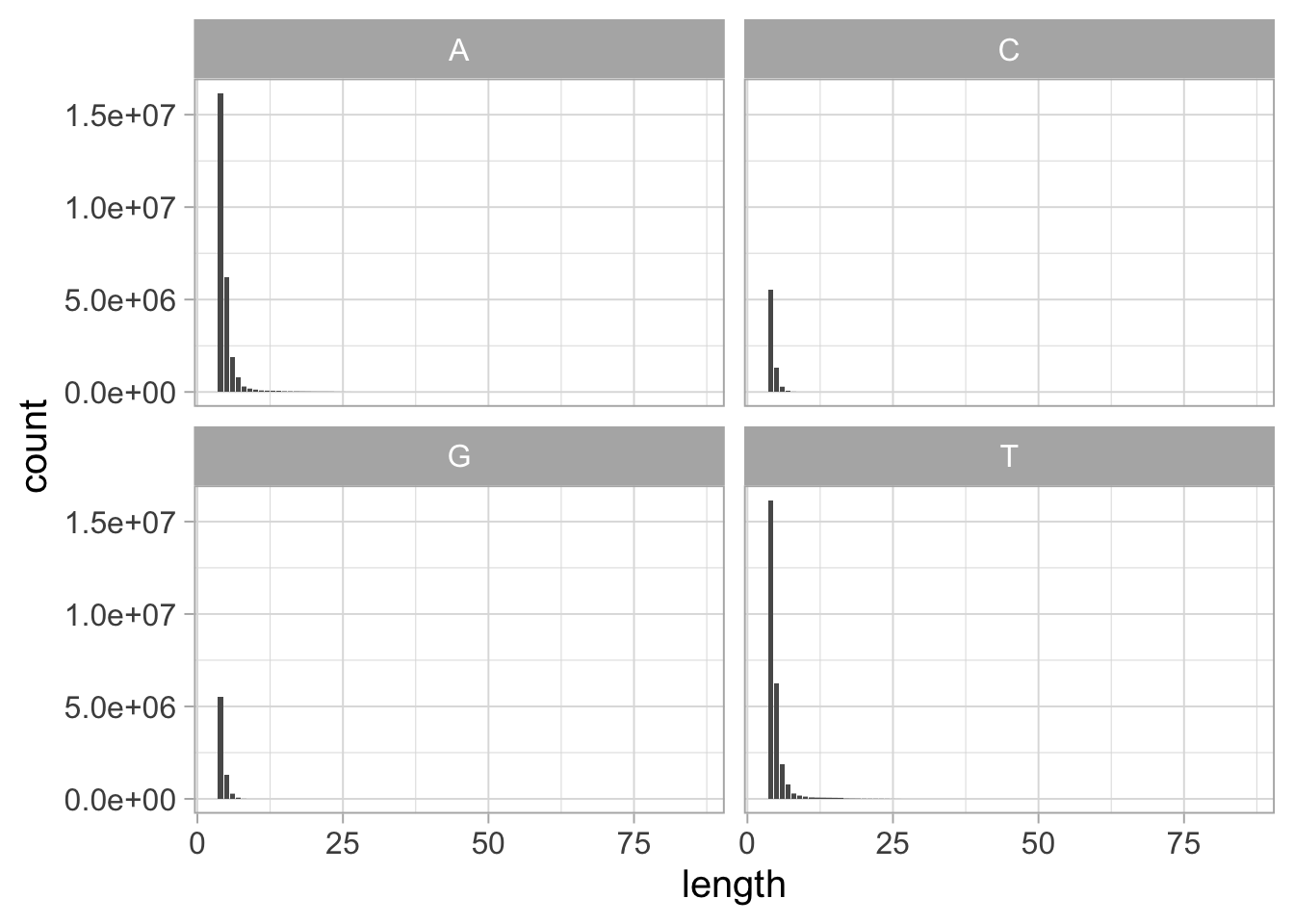

Homopolymers consist of a stretch of repeated nucleotides (i.e. \(\geq 2\)) occurring at some point in the genome. For example, the sequence AAAA is a length 4 adenine homopolymer. In the complete human genome assembly (CHM13 v1.1 from the T2T consortium4), 50% of its three gigabases are in homopolymers of size 2 or more, and 10% are in homopolymers of length equal or greater than 4. As can be seen in Figure 1.4, short and medium length homopolymers make up a significant part of the genome. In a previous GRCh38 human genome assembly, more than 1.9 megabases are in homopolymers of length 8 or higher98, representing about 1‰ of that assembly. The longest homopolymer run in the CHM13 v1.1 assembly is 86 (90 in GRCh3898).

![**Homopolymer fraction of the whole human genome by homopolymer length.**

The homopolymer counts were calculated from the T2T consortium full human genome assembly CHM13 v1.1. This figure was inspired by Figure 3b of reference [@booeshaghiPseudoalignmentFacilitatesAssignment2022].](_main_files/figure-html/HPpercent-1.png)

Figure 1.4: Homopolymer fraction of the whole human genome by homopolymer length.

The homopolymer counts were calculated from the T2T consortium full human genome assembly CHM13 v1.1. This figure was inspired by Figure 3b of reference98.

In the human genome, homopolymers tend to occur more often in adenine and thymine runs than guanine and cytosine. There are are approximately twice as many nucleotides within A or T homopolymers (481 Mb and 484 Mb) than G or C (278 Mb and 279 Mb). This discrepancy is even more pronounced when looking at homopolymers longer than four nucleotides (c.f. Figure 1.5).

Figure 1.5: Distribution of homopolymer lengths per base in the human genome, for homopolymers of length \(\geq\) 4.

The homopolymer counts were calculated from the T2T consortium full human genome assembly CHM13 v1.1.

1.4.2 Homopolymers and long reads

Unfortunately, homopolymers are a source of errors in sequencing, particularly for long-read technologies. While substitutions seem to be randomly distributed along the reads for PacBio and ONT, the main error mode seems to be indels in homopolymeric sections, i.e. reading the same nucleotide several times or skipping over one of the repeated nucleotides. Many studies show that homopolymeric indels are the main type of error for PacBIO SMRT and ONT long-read sequencing68,99–101. This is even the case for PacBio HiFi reads, while the circular consensus approach eliminates the randomly distributed substitutions but homopolymer indels remain87. It seems that ONT reads are more prone to this type of error than PacBIo56. The rate of these errors is independent of the length of the homopolymer for ONT, but it rises with homopolymer length for short-read and PacBio technologies102.

1.4.3 Accounting for homopolymers

The fact that they make up a significant part of the human genome, and that they are a source of errors for long read technologies means that homopolymers warrant special attention and care. Methods have been devised and implemented, specifically to counter homopolymer-linked errors.

1.4.3.1 Specific error correction

Homopolymer errors are taken under special consideration during assembly polishing when using certain tools like HomoPolish103, NanoPolish80 or Pilon86. Methods to improve base calling of homopolymer stretches have been developed for nanopore sequencing104,105, and implemented in state of the art base-callers such as guppy or scrappie53.

Steps before sequencing can also be taken in order to reduce the effect of these errors, like avoiding homopolymers in barcode sequences106,107 or during the development of DNA based storage systems108.

Improving the sequencing technologies can also be a solution, by reducing the number of homopolymer errors straight from the source. The latest ONT chemistry R.10 reportedly improves accuracy in homopolymer rich regions74,109. Non-biological solid-state nanopores also reduces errors in homopolymers110,111.

1.4.3.2 Homopolymer compression, a nifty trick

Homopolymer-errors can be harmful for downstream analyses such as read-mapping (c.f. Chapter 3). However, in many cases, reads cannot be re-sequenced with newer technologies, or base-called with better base callers. Only the read sequences potentially containing homopolymer errors, are available for usage. In order to account for this sort of error, a simple pre-processing trick was developed: homopolymer compression (HPC).

The idea is very simple: for any sequence, replace a repeated run of any nucleotide (i.e. homopolymers) by a single occurrence of that nucleotide. This means that after going through HPC the sequence AAACTGGG will yield the sequence ACTG. This simple pre-processing step, applied to all the reads and sequences to analyze, removes all indels in homopolymers, and can resolve some ambiguities (c.f. Figure 1.6). It can also remove legitimate information contained in homopolymers, but the trade-off with the reduced error rate has been deemed advantageous.

HPC has been implemented in many sequence bioinformatics software tools. The HiCanu112, MDBG113, wtdbg275, shasta114 assemblers all use HPC under the hood to provide better assemblies, and HPC was used to assemble the complete human genome sequence4. The first published usage of HPC, was actually in the CABOG assembler115 developed for pyrosequencing reads. HPC has also been implemented for other tasks, like clustering116, long read error correction with LSC117 and LSCPlus118, alignment with minimap2119 and winnowmap2120, or specific analysis pipelines for satellite tandem repeats121.

![**Homopolymer compression can help resolve ambiguities due to sequencing errors.**

A read with homopolymer related sequencing errors can be homologous to two different regions of the reference genome, with one discrepancy for each region. After applying HPC, this ambiguity is properly accounted for and the read is homologous to only one region. This figure, however, only shows one way homopolymers can be detrimental and others are possible^[Homopolymer indels can be harmful in opposite circumstances as well. Let us consider, for example, a read that should correspond to several repetitions of a conserved motif. Homopolymer indels can artificially resolve an ambiguity by making the read unique and prefer a specific repetition of the motif or entirely misplace the read.].](figures/Sequence-Intro/Hpc.png)

Figure 1.6: Homopolymer compression can help resolve ambiguities due to sequencing errors.

A read with homopolymer related sequencing errors can be homologous to two different regions of the reference genome, with one discrepancy for each region. After applying HPC, this ambiguity is properly accounted for and the read is homologous to only one region. This figure, however, only shows one way homopolymers can be detrimental and others are possible3.

1.5 Conclusion

I hope, after reading this chapter, you will agree with me that sequencing is fundamental for furthering our knowledge of biological processes, organisms and Life in general. And as such, the sequencing field is still very active with new technologies being developed to improve the current technologies in various aspects. Illumina promises high accuracy long reads with Infinity97 and PacBio is developing its own short read sequencing technology, moving away from sequencing by synthesis122,123. Finally, efforts are also being made to make sequencing more affordable and available in a greater number settings with Ultima genomics promising accurate short reads for as low as $1 per gigabase124.

With all these technological improvements we are approaching an era where sequencing is easy and quick, opening the door for massive projects like Tara Oceans125 or the BioGenome project126 to better understand biodiversity. Routine whole-genome sequencing could also usher in an era personalized medicine127.

Despite all these advancements, sequencing errors remain an obstacle to certain analyses. This is particularly true for the ever more used and useful long reads, and the important fraction of genomes made up of homopolymers. Detecting, removing or accounting for these errors in some way is a crucial step to improve any analysis based on sequencing data, and to make sure that no theory or conclusion are built upon erroneous sequence data.

Finally, it is important to note (at least for the remainder of this thesis) that, from a computational standpoint, a biological sequence is simply a succession of letters and a set of reads is simply a text file. Therefore, many analyses and data processing methods are inspired or directly transposed from the field of text algorithmics.

References

Homopolymer indels can be harmful in opposite circumstances as well. Let us consider, for example, a read that should correspond to several repetitions of a conserved motif. Homopolymer indels can artificially resolve an ambiguity by making the read unique and prefer a specific repetition of the motif or entirely misplace the read.↩︎