Chapter 4 Learning From Sequences and Alignments

Sequences and sequence alignments are very rich sources of information. As was stated in Chapters 2 and 3, many downstream analyses rely on sequence alignments.

In whole genome assembly, where sequencing reads are combined together to deduce the genome sequence, pairwise sequence alignment is used, both in reference-based319,320 and de novo321,322 assembly. It has also been used to deduce protein function323. Pairwise alignment, has been used for sequence clustering116 as well as detecting genetic324 and structural variants325,326. Multiple sequence alignments are also very widely used, mainly in phylogenetic analyses where the evolutionary history of a set of sequences are studied and represented as trees327,328, but they have also been used extensively in protein structure prediction329.

More recently, as computational power and datasets have grown, more and more machine learning methods are being used on sequence alignments in order to gain biological insight. In this chapter, we will explore how this can be done, as an introduction to Chapter 6 where we present an application: predicting HIV drug resistance mutations.

4.1 What to learn ?

One of the first questions one might ask themselves when wishing to use machine learning with sequence data is “what can I learn?”. A simplistic answer to this question would be “a lot of things” as the following section will strive to show. To choose what we learn we must first choose a learning paradigm.

4.1.1 Supervised learning from biological sequences

Supervised learning is one of the main machine learning paradigms, where we have data that consists of a collection of input and output pairs (e.g. a DNA sequence and an associated species). By feeding these pairs to our algorithm of choice, it will learn to predict the output based on the input alone. This is a very powerful way of learning something interesting. We can consider the link between inputs and outputs as extra knowledge that the dataset creator or curator can infuse in the learning algorithm. Within the supervised learning paradigm there are two possible tasks: regression and classification.

4.1.1.1 Regression tasks

For regression tasks, the outputs of our input-output pairs are encoded by a continuous numerical value. Regression models will therefore output continuous real values. Fortunately, many interesting continuous values can be computed from aligned sequences, and in many cases, machine learning models can be trained to predict these variables.

Regression methods have been used to predict drug response in cancer patients330 and resistance levels to drugs in HIV331. These methods are also extensively used in protein structure prediction where they are trained to predict residue angles or values in protein contact maps from aligned sequences332–336, or directly from an MSA137. Regression algorithms have been used to predict protein fitness in silico337–339 to speed up protein engineering, and make some processes like drug development faster and cheaper. They have also been used in many other tasks such as predicting gene expression levels340 or predicting multiple sequence alignment scores341.

In many cases these methods use an encoded representation of the sequences (c.f. Section 4.3) as input, but some represent the inputs as values computed from alignments. For example, protein structure can be predicted from contact maps342 derived from MSAs, and gene expression levels can be predicted from lists of mutations that are obtained through alignment to a reference sequence340. This last approach is also used in Chapter 6 to predict drug resistance in HIV.

4.1.1.2 Classification tasks

For classification tasks, the outputs of our input-output pairs are categorical in nature and often represented as discrete integer values. Originally, most classification methods were designed for binary classification with only two possible outputs: a “positive” and a “negative” class. This is a simpler problem to solve than multiclass classification problems where more than two outputs are possible. Most methods that can handle binary classification have been adapted to multiclass classification.

In biology, categorizing and classifying is often at the root of several research problems. As such, machine learning classifiers have obvious applications and have been widely used with sequence data as inputs. Classifiers have been used to predict if a particular virus343,344 (also Chapter 6), or bacteria345,346 is resistant to antiviral or antimicrobial drugs respectively. Some classifier models have also been used to predict characteristics at positions in a sequence, like methylation site prediction347 or splicing site detection348. This type of approach has also been applied for sequence labeling tasks, where each token of a sequence (amino acids, codons, …), is assigned a label using classifiers. This has been applied to protein secondary structure prediction349 where each residue is assigned a label (\(\alpha\)-helix or \(\beta\)-sheet), or gene prediction where stretches of the sequence are classified as part of a gene or not350,351. Base calling for Nanopore sequencing data (mentioned in 1.2.3), is also a sequence labeling task, although the sequence is made up of voltages and the assigned labels are nucleotides. Finally, classifiers have also been used to predict more general characteristics of a given sequence, like the cellular localization352 and putative function353 of proteins, or the cellular localization of gene expression data354.

I have presented here, only a fraction of what is possible to learn from sequences in the supervised learning paradigm. I hope you will agree with me that there is no shortage of problems in computational biology that are suited to this sort of approach. By using machine learning, instead of more formal statistical approaches, there is a lower amount of upfront assumptions and the algorithm is tasked with figuring out what features of the data are important or not for the task at hand.

4.1.2 Unsupervised learning from biological sequences

The second main machine learning paradigm is called, by contrast to supervised learning, unsupervised learning. In this paradigm we do not have input-output pairs but only inputs. The goal of unsupervised machine learning methods is to extract some structure or patterns from the given input without additional guidance.

One of the main tasks in the unsupervised learning paradigm is clustering, wherein similar inputs are grouped together, methods like \(k\)-means or hierarchical clustering355 often use some type of distance metric between inputs to define clusters of similar inputs. Clustering can be used for classification tasks, indeed if some characteristics of sequences in a given cluster are known then we can make the assumptions that sequences in the same cluster will be similar and share these characteristics. This has been used to group proteins in families356. Clustering methods can also be used to remove duplicate or near-duplicate sequences in datasets357. Phylogenetic trees can be considered as a specific type of clustering methods, and they have been used to group biological sequences358.

One of the main obstacles to clustering biological sequences is the need for computing distances between sequences. As stated in Chapter 2, obtaining a biologically relevant distance metric between two sequences, such as the edit-distance, is no easy task. Additionally, in many cases, all pairwise distances are needed for clustering, meaning at least a quadratic time and space complexity for a naive clustering algorithm. Two approaches can be used to resolve this problem: devise methods that do not need all pairwise distances359, or find a way to speed up distance computation. Some methods have been developed to devise distance metrics that are biologically relevant and less expensive to compute than the edit-distance: like the hashing based MASH360 or dashing361, or the neural network based NeuroSEED362.

Unsupervised learning can also be used without clustering. For example, unsupervised methods based on maximum likelihood approaches have been used to predict mutational effects in protein sequence363 as well as predict recombination hotspots in human genomic sequences364.

In many cases, unsupervised learning can be done as a preliminary dimensionality reduction step to a supervised learning task. Indeed biological data is often high-dimensional, and it is often useful to lower the amount of dimensions to speed up computations. Some unsupervised methods can reduce the number of dimensions while retaining most of the information. One such method, Principal Component Analysis (PCA), is widely used. PCA has been applied to distance matrices to compute phylogenetic trees365, and work has been done to apply PCA directly to MSAs without needing to go through a distance matrix366. PCA is also widely used in clustering applications367–370.

4.1.3 Others paradigms

More recently, other learning paradigms have gained popularity in machine learning circles. Within the semi-supervised paradigm, a small amount of labelled data (i.e. input-output pairs) is included in a large un-labelled dataset, and methods can leverage both. This approach has been used to predict drug to protein interactions371 and predict the secondary structure of specific transmembrane proteins372.

In the self-supervised paradigm, models are first trained on a proxy task that hopefully makes use of important information in the data. Through this pre-training step, self-supervised models extract important information from the data and create internal features and models that can then be leveraged in a supervised or unsupervised fine-tuning task. This paradigm has exploded lately within the field of natural language processing and machine translation with the rise of transformers, but has also been widely used to create protein language models like ProtBert373 and extract information from disordered protein regions374. We will look at self-supervised learning in a little more detail in Chapter 7.

Finally, the end-to-end learning paradigm designates the process of chaining several machine learning tasks together and optimizing the algorithms simultaneously using the error from the loss of the last task of the group. This has been successfully used to predict protein-protein interaction surfaces in three dimensions375 as well as predict micro-RNA targets sequences376. This paradigm can also be used in a task-based fashion, where a differentiable loss function is crafted on a traditionally non-machine learning task and used to train preceding models. This has been explored for sequence alignment and is further detailed in Chapter 7

4.2 How to learn ?

Machine learning regroups a multitude of techniques and methods to extract knowledge and make data-driven predictions. In this section, we will quickly go over some of the main supervised-learning methods, and go into more detail for techniques used in Chapter 6: logistic regression, naive Bayes and random forests.

4.2.1 General setting

Supervised machine learning is an optimization process. A given algorithm, which I will refer to as a model, has an associated loss function that can be evaluated on a dataset. This loss represents how well the model is predicting outputs from inputs on known input-output pairs. Through an iterative process, this loss is optimized (in our case minimized) over all pairs forming a dataset. Often, in the literature, loss and cost are used interchangeably377. I will favor loss in the following sections.

There is no shortage of loss functions378, some of them are specifically crafted for a given model while some are widely used in regression tasks like the Root Mean Square Error (RMSE). Others like the cross-entropy loss are used in classification tasks.

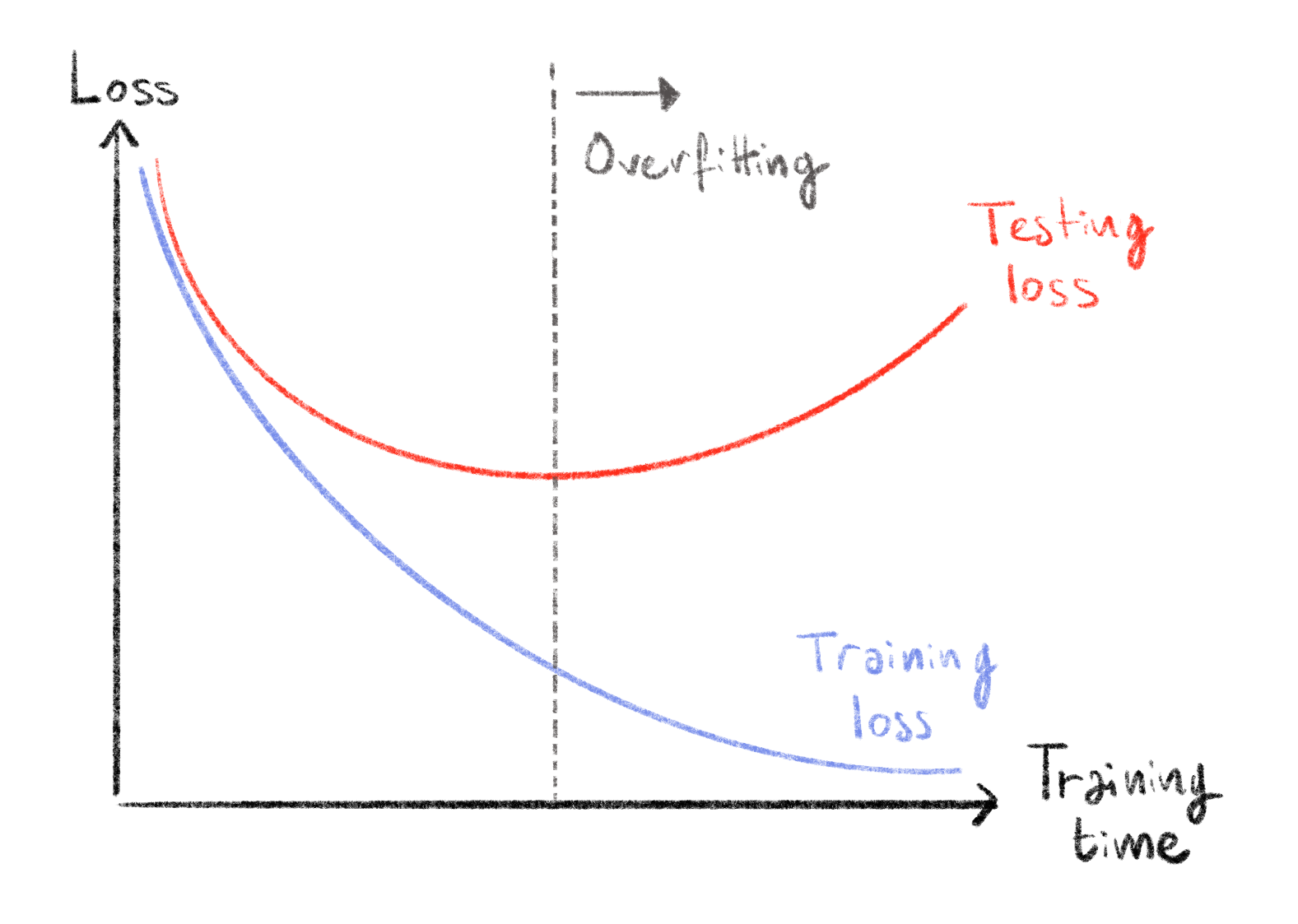

After training a machine learning model on a dataset, it is often important to compute a performance measure to get an idea of how well this model is performing. We could do this on the same data on which the model was trained, this would however be wrong. Indeed, it gives an unfair advantage to the model since it predicts outputs from examples it has already seen. Furthermore, it gives us no insight into the generalizability of the model since it could just learn the dataset by heart, getting a perfect score on it while being completely useless on new unseen data. This situation is known as overfitting355, shown in Figure 4.1. Since being able to predict outcomes on unseen data is the main goal of a machine learning model, we need another way of measuring model performance. The way machine learning practitioners can measure the performance of their model in a more unbiased manner is by separating the dataset into two parts before even starting to train the model: one part (usually the majority of the data) is used as the training set, and the other as the testing set. Logically, the training set is used to train the model while the testing set is used to evaluate the performance of the model after training.

Figure 4.1: Overfitting behaviour in loss functions.

The two curves show how the loss calculated on the training set (blue) and the testing set (red) evolve as training time increases. At first both decrease showing that the model learns informative and generalizable features. At some point, training loss keeps decreasing and testing loss increases, meaning that the model is learning over-specific features on the training set and is no longer generalizable: it is overfitting.

As there is a multitude of loss functions, there are many performance metrics to assess how the model is doing on the testing data, especially for classification tasks379. For regression, RMSE is also widely used as a performance metric, along with the Mean Absolute Error (MAE). For classification, accuracy is the most widely used performance metric. Accuracy is the ratio of the number of correctly classified examples divided by the total number of examples. Accuracy has also been adapted to specific settings like unbalanced data where the different possible output classes are not represented equally380. The testing set must stay completely separate from the training set and decisions about model settings or input features used must be made without help of the testing data. If these stringent conditions are not respected this can lead to data leakage and artificially increase performance of the model on the testing data, giving us a biased view of the model’s performance and generalizability381. This leaking of testing data into the training process is a common pitfall of machine learning382. To remedy to this problem, a separate dataset is often reserved and used as a validation set, in order to provide some estimation of model performance without using the testing set.

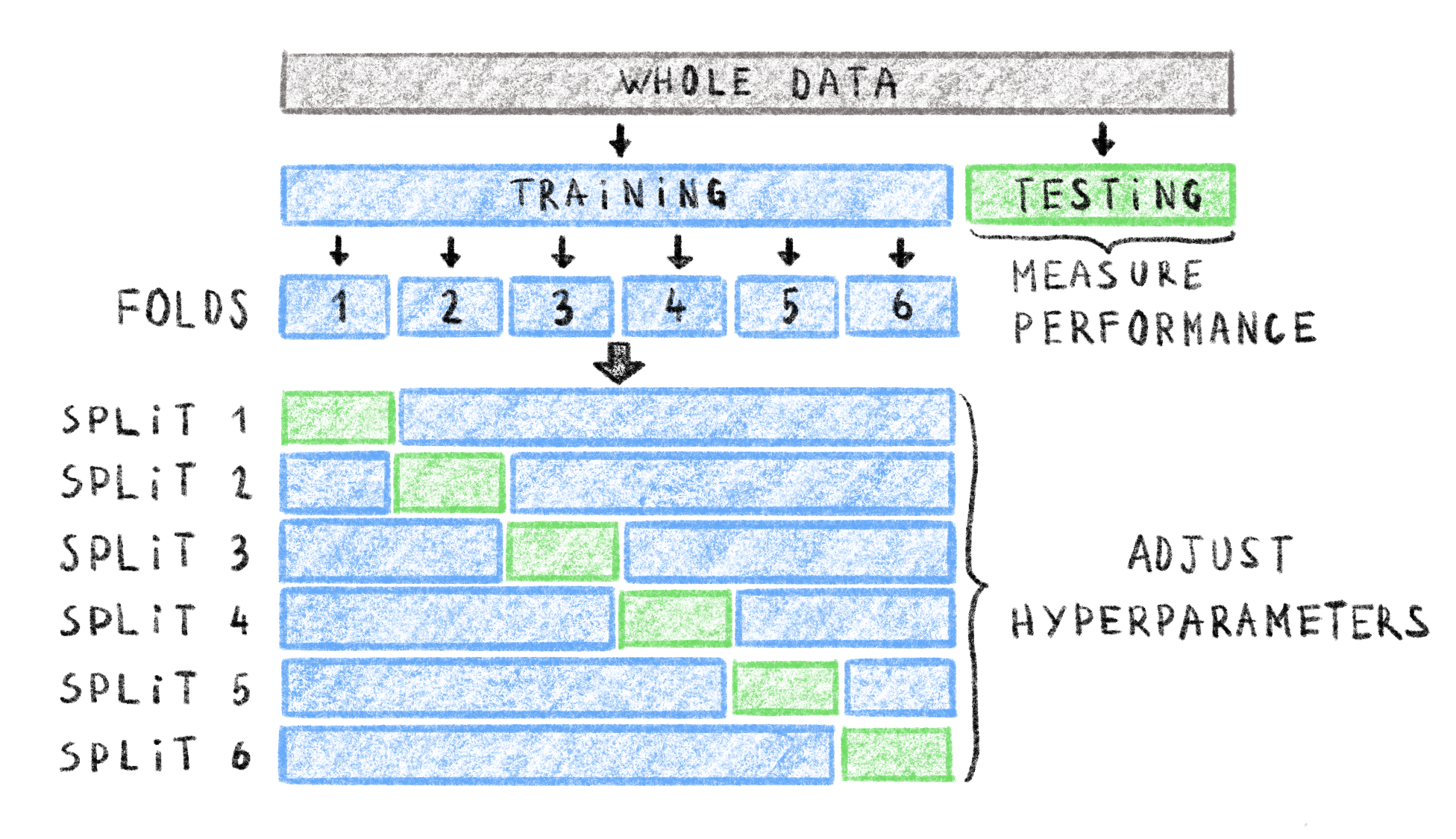

In many cases, machine learning models have a number of parameters that guide model behavior. These parameters are chosen before training and are different from the internal parameters of the model that are optimized during training. As such, they are often called hyper-parameters. These could, for example, be the number of levels in a decision tree, a learning rate, or a stopping threshold. The value of these hyper-parameters is often very influential on model performance. However, setting hyper-parameter values based on the model’s test set performance would lead to data leakage as stated earlier, and using a separate validation set can lead to small training sets. To still be able to tune hyper-parameters for optimal performance, and keep a large training set, \(k\)-fold cross-validation is used355. In this setting, shown in Figure 4.2, the testing set is set aside before model training and reserved for the final model performance evaluation. The training set is then further subdivided into \(k\) equally-sized subsets, called folds. Each of the \(k\) folds is then used to create a what is called a validation split: the fold acting as a within-split testing set and the rest of the general training set is used as the within-split training set. This results in \(k\) pairs of disjoint training and testing sets, and each example of the general training data is used exactly once in a within-split testing set. An idea of the model performance can be obtained by measuring performance on the within-split testing sets and averaging the measures. This cross-validation performance can be used to inform hyper-parameter value choice without using the reserved testing set and avoiding data leakage.

Figure 4.2: Example of data splits into training, testing and validation sets with 6-fold cross-validation.

In this setting, the whole data set is first split into a training and testing set. The testing set is kept separate to assess final model performance. The training set is split into 6 folds resulting in 6 splits. In each split of the training set, the correspoding fold is used as the within-split test set (green), and the rest of the training set is used as the within-split training set (blue). You can get an idea of the model performance by averaging measures on within-split testing sets and adjusting hyper-parameters accordingly, without using the global, reserved testing set. Adapted from https://scikit-learn.org/stable/modules/cross_validation.html

This is the general setting in which a lot of the supervised learning approaches in computational biology reside, e.g. cross-validation was used to tune hyper-parameters for the models in Chapter 6.

4.2.2 Tests and statistical learning

Some of the simplest models possible are derived from statistics and based on probabilities. One such way to classify data is with a statistical test, like Fisher’s exact test383 or a \(\chi^2\) test384, depending on the number of training examples. If one of the input variables is significantly related to the output then one can make a crude prediction on the output based solely on the value of one input variable. By testing several features and predicting the output from a set of significantly related input variables (e.g. through a vote), then prediction accuracy can be improved. This approach is used as a baseline in the study presented in Chapter 6. It is, however, not very sophisticated and does not have the best predictive power.

A model that fits more squarely in the process of supervised learning described above is linear regression. This regression model assumes that the output value results from a linear combination of the input features and an intercept value. The coefficients of this linear combination and the intercept are the parameters that the models optimizes during the learning process. Often, the loss function used to fit this model is the RMSE mentioned above. The gradient of the RMSE w.r.t. all the coefficients of the model is easily derived and can be used for optimization. Since this model is very simple there is an exact analytical solution to find the minimum gradient value355. However, in some cases a gradient descent approach can be beneficial to train this model. This model has also been adapted to binary classification, by considering that the output value results from a linear combination of input models, passed through a logistic function. The resulting model is called logistic regression, and is one of the classifiers used in Chapter 6. Equations (4.1) and (4.2) show the mathematical model of linear and logistic regression respectively. In these equations, \(\hat{y}^{(i)}\) represents the predicted output of the ith example and \(x_j^{(i)}\) the jth variable of the ith example input. \(\theta_0\) is the intercept and \(\theta_j\) the coefficient corresponding to the jth input variable.

\[\begin{equation} \hat{y}^{(i)} = \theta_0 + \sum_{j=1}^k \theta_j \cdot x_j^{(i)} \tag{4.1} \end{equation}\] \[\begin{equation} \hat{y}^{(i)} = \frac{1}{ 1 + e^{ -(\theta_0 + \sum_{j=1}^k \theta_j \cdot x_j^{(i)}) } } \tag{4.2} \end{equation}\]The model in Equation (4.1) outputs a continuous value used in regression, and the model in Equation (4.2) outputs a continuous value bounded between 0 and 1, that we can consider a probability of being in one of the classes. With this probability it is easy to classify a given example in one of the two classes. It is easy to extend the logistic regression model to multiclass classification, by training several models and predicting the class with the maximal probability.

These linear models are simple, but can achieve good performance. They can, however, be prone to overfitting. This often translates into very large values for the \(\theta\) coefficients. In order to counter this, regularized versions of linear and logistic regression were introduced by adding the weights to the loss function in some way. By adding the coefficient values to the loss they are kept small through the optimization process, reducing the risk of overfitting. The two main regularization strategies are the ridge385 and Lasso386 penalties.

The final supervised model I will present in this section is the Naive Bayes classifier. As its name indicates, it is based on Bayes’ theorem of conditional probabilities. By making a strong assumption, that all variables of the input examples are mutually independent, we can derive the probability of the ith input example belonging to class \(C_{\alpha}\) as:

\[\begin{equation} p(C_{\alpha} | x^{(i)}_1, \ldots, x^{(i)}_k) = Z \cdot p(C_{\alpha}) \prod_{j=1}^k p(x^{(i)}_j | C_{\alpha}) \end{equation}\]With \(Z\) a constant that can be computed from the training data. Therefore it is very easy to use this to build a classifier by computing the probabilities of an example belonging to a class for all possible classes in the training data and assign the one with the maximal probability. In practice this is a very flexible model, since any probability distribution can be used for each feature and class. The parameters of these distributions can be learned with a maximum likelihood approach for example. This model builds upon the naive assumption (hence the name) that all input variables are mutually independent. This assumption is very often violated, especially in biological sequence data where independence is not at all guaranteed by the evolutionary process. This model is, however, quite robust to this, and stays performant despite the violations of this assumption387,388.

4.2.3 More complex methods

While these simple methods are quite useful in many settings, more complex methods were developed. One of the most popular methods, up until fairly recently, were Support Vector Machines (SVM). This classifier was first developed in 1982389 and it functions by finding the optimal separation hyperplane between 2 classes, i.e. a linear boundary in high-dimensional space between training examples of two classes. What made it so popular is when it was associated with the so-called kernel trick390,391. With the kernel trick, training examples that cannot be linearly separated can be cheaply projected into a higher dimensional space, where linear separation is possible. This made SVMs very powerful and popular, and it was quickly adapted for regression tasks as well392. The main model that will interest us in this section however is not the SVM.

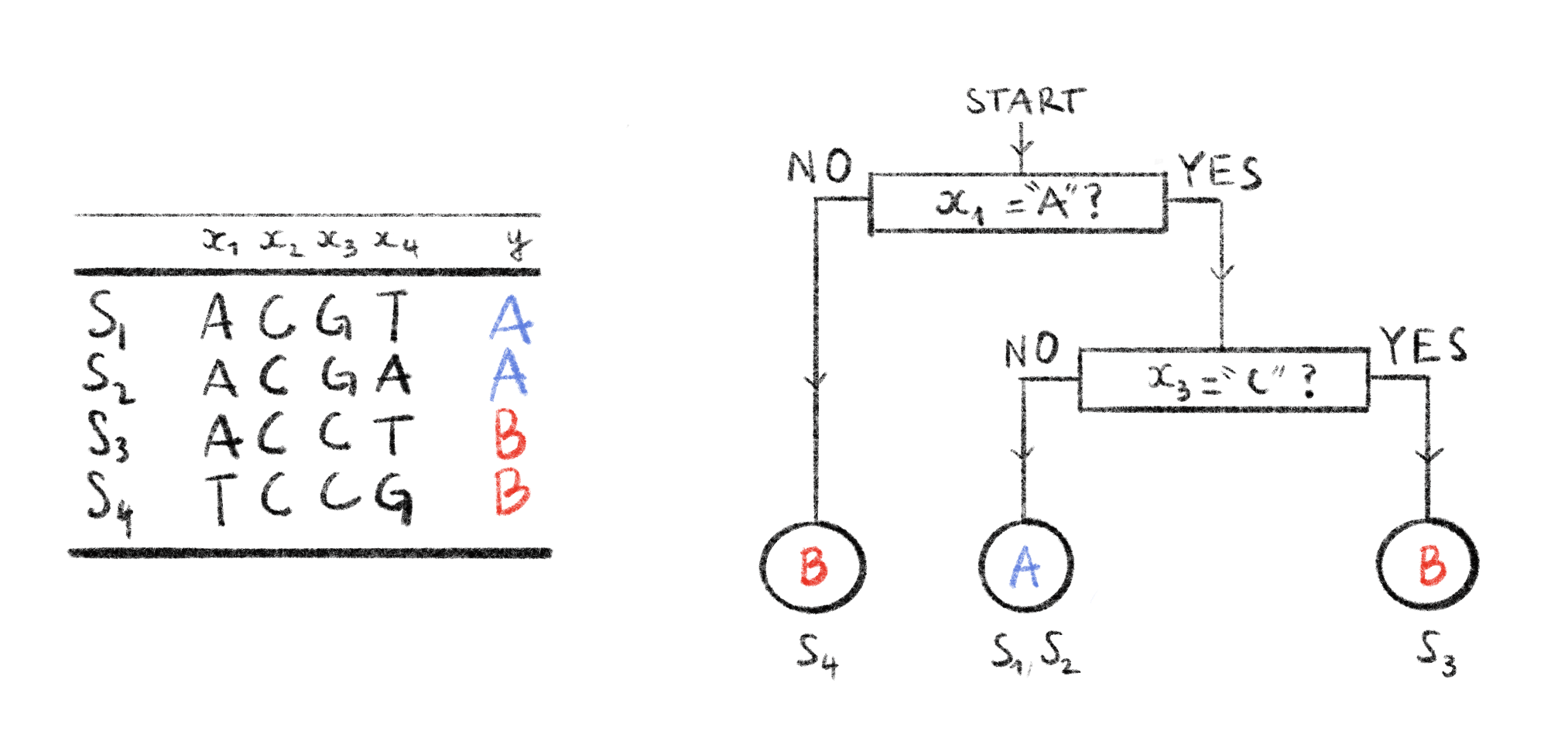

Random forests are another very popular model used for both classification and regression. As it is used in Chapter 6, we will go over it in more detail. Developed in the early 2000’s393, it builds upon previous work: Classification And Regression Trees (CART)394. CART decision trees are very useful for supervised learning tasks. To use CART trees, start at the root and at each node there is a condition on a single input feature. This condition decides if the considered example goes to the right child or the left child. By traversing this tree, choosing the path through the conditions at all the nodes met by the example, we can assign the example to one of the leaves, corresponding to a class or a predicted value. An example of such a tree is given in Figure 4.3.

Figure 4.3: Example of a decision tree on DNA data.

Here we have a dataset of 4 DNA sequences \(S_1, \ldots, S_4\). Each sequence has 4 input variables \(x_1,\ldots,x_4\), and an output variable \(y\) indicating the strain of a sequence. Each sequence can be classified by the decision tree on the right by following a path, from root to leaf, according to the conditions in internal nodes. The predicted strains are shown in the leaves. Sequences that end up in a given leaf node are indicated underneath that leaf node. This tree can classify these 4 sequences perfectly.

It is actually quite simple to build CART trees, the whole methods lies upon the principle of minimizing impurity (or maximizing purity) on a given input variable in child nodes. Impurity can be defined in many ways355: for regression it is often the Residual Sum of Squares (RSS), for classification it is often the Gini index or an entropy measure. Regardless of the chosen metric, a high impurity denotes a heterogeneous collection of examples and a low impurity indicates a homogeneous set of examples. When building the tree, recursively from the root, we find the condition, and the input variable on which the condition relies, by looking at all possible splits and choosing the one that decreases impurity the most in the child nodes. This process is continued recursively until the leaves are completely pure (likely resulting in overfitting) or until a certain stopping condition is met (e.g. purity threshold, maximum depth, …). To avoid overfitting, trees can also be pruned after the building phase. CART trees have the distinct advantage of being interpretable: it is easy to figure out why an input has been assigned to a certain class, which can be very useful in biology or medicine395.

Despite these good properties, it is easy to overfit with decision trees, and small changes to the training data can induce large changes in the resulting tree355,395, hurting interpretability. This is why the Random Forest (RF) model was created. RFs are essentially an ensemble of decision trees, a forest, built from the training data. To build one of the decision trees in a random forest, the training data is first bootstrapped: a new training set is sampled with replacement from the original training data with the same number of examples. This process is called bagging for “bootstrap aggregating”. With this procedure, each decision tree is built from slightly different training data and will therefore likely be slightly different. An additional step to ensure some variability between the trees is in the choice of the splitting condition at each tree node. Where, in CART trees, all input variables are considered to find the optimal split, in RF trees, only a random subset of the input variables are considered at each node. This results in a set of decision trees that are all trained from slightly different data, with slightly different features at each node but that all have the same task on the training data. We can get a prediction from all these trees, by taking the majority predicted class for classification trees, or the average of predictions for regression trees.

All these measures to reduce the variance linked to decision trees, and to yield more generalizable models, make random forests very popular. They are often very competitive and often have better performance than the models presented above396,397. Furthermore, by only considering a subset of features at each tree node, RF often deals better with high-dimensional data than other methods397. Further refinements to the algorithm such as boosting, where misclassified examples are more likely to be selected in the bagging training sets have been very useful as well.

Deep learning has been used more frequently and more broadly to get good results across a large number of tasks. This is also true in biological contexts. However, Chapter 6 does not make use of deep learning methods so they will no be discussed here. A short introduction to deep learning will be presented in Chapter 7.

4.3 Pre-processing the alignment for machine learning

By now you will surely have noticed that all the models I presented above (with the exception of RFs) need to be trained on a collection of numerical variables, i.e. numerical vectors. Biological sequences, however, are not vectors of numbers. We therefore need to transform our sequences of letters into numerical sequences that we can feed to the machine learning model in this digestible form. Most supervised machine learning algorithms expect a matrix as input, where the rows are individual training examples and the columns numerical variables. A vector where each entry corresponds to an expected output value is used during training. In this section, I will present a few encoding methods, that transform a multiple sequence alignment in a matrix. Most of the encoding methods are not defined on an alignment, but on sequences alone. However, to represent these sequences they often need to have the same length, and for models to learn anything meaningful the values in features should encode the same information across sequences. Therefore, prior to the encoding methods described below the sequences often need to be aligned to each other so that a specific position in a sequence is homologous to that position in all other training sequences.

4.3.1 General purpose encodings

The letters making up biological sequences are a form of categorical data. This type of variable is not specific to biology and as such, there exists many encoding schemes398 to transform categorical variables into numerical vectors.

The most basic, and conceptually simple way to do so is to use the labeling scheme, often called ordinal encoding. Each level of the categorical variable is assigned an integer label. For example, when dealing with DNA sequences, we could have A=1, C=2, G=3 and T=4. This scheme outputs vectors that have the same size as the input sequence and going from the sequence to the encoded vector (and vice versa) is very easy. This encoding scheme has been used to predict resistance levels of HIV to antiviral drugs from sequencing data331. There is, however, a major disadvantage with using this method. As its name indicates, ordinal encoding implies that there is an order to the categorical variable levels (e.g. T>A) which, by definition, does not exist399–401. Another option is to use what I will refer to as binary labeling, where the categorical levels are first assigned an integer label which is then converted to a binary vector. If we use the ordinal DNA encoding from above and convert it to binary vectors we would get: A=\([0,0]\), C=\([0,1]\), G=\([1,0]\) and T=\([1,1]\). This type of representation is frequently used to represent gapless sequences, like \(k\)-mers, in a compressed form402,403 (a character now only needs 2 bits instead of a full byte). For amino acids, since there are more characters, this encoding yields vectors of 5 bits404. Fundamentally, this encoding scheme has the same problem as the ordinal encoding, creating an order that does not exist, although with the order being split into separate values it can mitigate this effect a little bit.

One of the most widely used categorical encoding schemes, One-Hot encoding (OHE) (sometimes called orthonormal encoding405), does not have this ordering issue. The way OHE works is by creating a sparse binary vector of length \(d\) to represent a variable with \(d\) levels (for DNA \(d=4\)). If the ith level of the categorical variable is to be encoded, then the ith position in the vector is set to 1 and the rest set to 0. For example, if we consider that A is the first level of our variable then OHE would yield the following vector: \([1,0,0,0]\). This encoding scheme has been used from the 1980’s406 to now407, and is the scheme used in Chapter 6. The performance of OHE can be on par with ordinal encoding408, but it is easily interpretable, which is often very important in biology since there is a one to one correspondence between a categorical value and a numerical feature. The main problem with OHE is that it tends to increase the number of features quite a lot, since the encoded vector representation of a length \(n\) sequence is of length \(n\times d\). An example comparing Ordinal, Binary and One-Hot encodings can be seen in Figure 4.4.

![**Example of 3 general categorical encoding schemes.**

Two sequences, `ATCG` and `TAAT` are shown encoded in three different encoding schemes: ordinal, binary and one-hot encoding. In the ordinal encoding, each character is assigned an integer value, here A=0, C=1, G=3 and T=4. In the binary encoding, these integer values are encoded with 2 bits. In the one-hot encoding scheme, a character corresponds to a sparse vector indicating which level of the variable is present: here A=[1,0,0,0]. Ordinal encoding preserves the dimension of the sequence while binary and one-hot encoding result in vectors with a bigger dimension than the original sequence.](figures/Encode-seqs/General-purpose.png)

Figure 4.4: Example of 3 general categorical encoding schemes.

Two sequences, ATCG and TAAT are shown encoded in three different encoding schemes: ordinal, binary and one-hot encoding. In the ordinal encoding, each character is assigned an integer value, here A=0, C=1, G=3 and T=4. In the binary encoding, these integer values are encoded with 2 bits. In the one-hot encoding scheme, a character corresponds to a sparse vector indicating which level of the variable is present: here A=[1,0,0,0]. Ordinal encoding preserves the dimension of the sequence while binary and one-hot encoding result in vectors with a bigger dimension than the original sequence.

These three general purpose encodings are but some of many398, and since categorical variables are often used in machine learning applications, these encodings are often available in widely used software libraries409.

4.3.2 Biological sequence-specific encodings

While the general-purpose encoding schemes presented above work well enough in practice, some specific encoding methods were developed to include some biological information in the sequence encodings that hopefully machine learning models will be able to leverage during training. These encodings have mostly been developed for encoding protein sequences, using physicochemical properties of amino acids404.

AAIndex410 is a public database containing amino acid indices, i.e. sets of 20 numerical values (one for each AA) measuring some physicochemical property. There is a wide range of these indices, from hydrophobicity to flexibility or residue volume measures. By selecting an informative subset of 9 of these measures411, an amino acid can be represented by a length 9 numerical vector. In some cases, amino acids can be represented by all the 566 properties of AAIndex, and through PCA the dimension of the resulting numerical vectors can be reduced412. This biological sequence specific encoding has been implemented in a software library for biological sequence encoding413.

Another biological sequence-specific encoding is based on the Amino Acid classification Venn diagram defined by Taylor in 1986414, which groups amino acids into eight different groups based on physicochemical properties: aliphatic, aromatic, hydrophobic, polar, charged, positive, small and tiny. With this classification, a single amino acid can be represented by a vector of length 8, each element representing a group, set to one when the amino acid belongs to the group and to zero when it does not. This encoding method was used as early as 1987 to predict secondary structures of proteins415. Later on, another five groups were proposed and used to encode each amino acid with longer vectors416.

A third encoding method, named BLOMAP417, encodes sequences based on values from the BLOSUM62 substitution matrix presented in Section 2.1.3. BLOMAP is defined by using a non-linear projection algorithm to generate vectors of length five, that capture the similarity measures contained in BLOSUM62. This encoding has been used to successfully predict cleavage sites of the HIV-1 protease405,417 (c.f. Section 5.3.2.2). Other encodings such as OETMAP418 have been derived from BLOMAP.

These three encodings are far from being the only ones specific to biological sequence. Many other encoding schemes were developed to learn from this type of sequence data. Some schemes do not encode positional data, and as such, can be applied to unaligned sequences. The simplest of these would be to represent a sequence by its amino acid, or \(k\)-mer frequencies. The latter is often referred to as \(n\)-gram encoding419 and widely used, although with very short \(k\)-mers since the dimension of the encoding grows exponentially with \(k\). With 20 amino acids, this encoding results in vectors that have a length of \(20^k\). Other encoding schemes use codon information to encode amino acids. One such scheme was proposed in404, where an amino acid is represented by a directed graph where vertices are nucleotides and edges represent paths needed to represent codons that code for that amino acid. This graph can then be converted to a 16-dimensional vector by flattening the corresponding adjacency matrix and used as an encoding method.

During the work that led to Chapter 6, several encoding methods were tested: Ordinal, Binary, OHE, AAIndex and Group encodings. The same two training sets of sequences were encoded using each of these methods, and 10 RF models were trained on each of the encoded datasets, om a binary classification task. Accuracy, precision and recall metrics were used to evaluate the performance of the RF on each encoded dataset. According to these metrics, the RF model had the best performance on the datasets encoded with OHE. OHE, also has the advantage of being more easily interpretable. As such, it was chosen for the work presented in Chapter 6.

Other encodings have been used to convert a biological sequence into a single real value. An encoding method based on chaos game theory420 allows for a bijective mapping between the DNA sequence set and the real numbers set. This encoding is not specific to alignments and can be used to do alignment-free comparisons, as such it has been used often in bioinformatics applications421. Recently, this encoding scheme has been used to classify SARS-CoV2 sequences422, predict anti-microbial resistance from sequence data345 and for phylogenetic analysis423.

In recent years algorithmic developments, computing power increase and the massive amounts of available data have made deep learning methods useful, possible to train and very popular. This has given rise to new sequence encoding methods, that are learned on the training data. These are often referred to as embeddings rather than encodings. Since these learned embeddings are not used in Chapter 6, for the sake of thematic coherence I will not be mentioning them here. I will, however, go over these embedding methods shortly in Chapter 7.

4.4 Conclusion

Alignments, and the sequences within them, are rich sources of information, that have long been exploited widely for many different types of analyses. With the rise of machine learning in the last years, it is logical that machine learning models have been applied more and more frequently to biological sequence data. Machine Learning is a wide field with many different methods and paradigms. Even simple methods like linear regression or naive Bayes can be very useful, and more complex models like random forests have been able to make very good predictions on biological data. The model is not the only variable to take into account when looking to apply machine learning methods on sequence data. Different encoding methods will yield different vector representations, with different characteristics and applications. Special care must therefore be given to the choice of biological sequence encoding scheme, prior to starting a machine learning analysis.